MAHA report on children's health includes fake citations, possibly AI-generated

Last week the government released "The MAHA Report," a lengthy document that attempts to explain the rise of chronic disease in children.

Yesterday, it was discovered that some of the studies cited in the report simply do not exist.

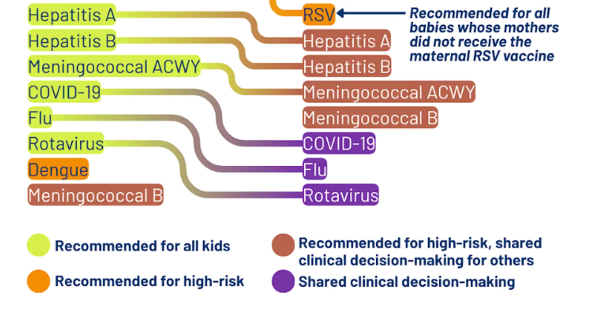

The Make America Healthy Again (MAHA) report suggests that chronic disease in children is increasing because of chemical exposures, lack of physical activity, consumption of ultra-processed foods, too many prescription drugs and vaccines, and stress. The report appears largely consistent with RFK Jr.'s preexisting beliefs on the topic.

And there is truth to several of these claims. For example, ultra-processed foods can drive people to overeat and gain weight, and lack of exercise is clearly associated with poor metabolic health. But the report also takes aim at routine medical care, suggesting that commonly prescribed medicines and treatments such as vaccines, ear tubes, antibiotics, and asthma controllers may be harming children.

Citations of studies that never happened

Yesterday, NOTUS reported that several of the citations in the report simply do not exist.

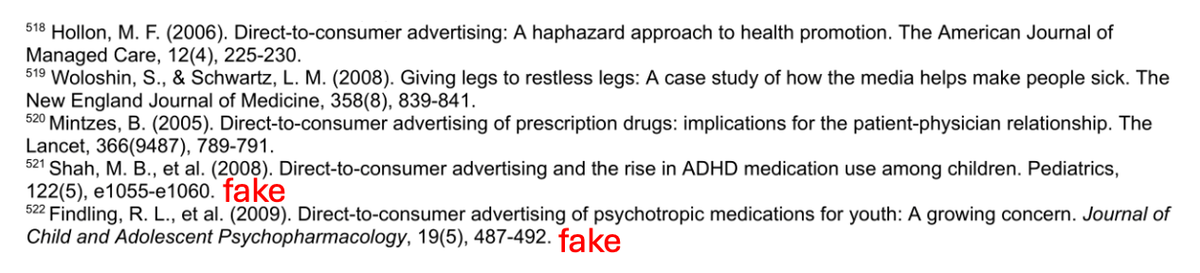

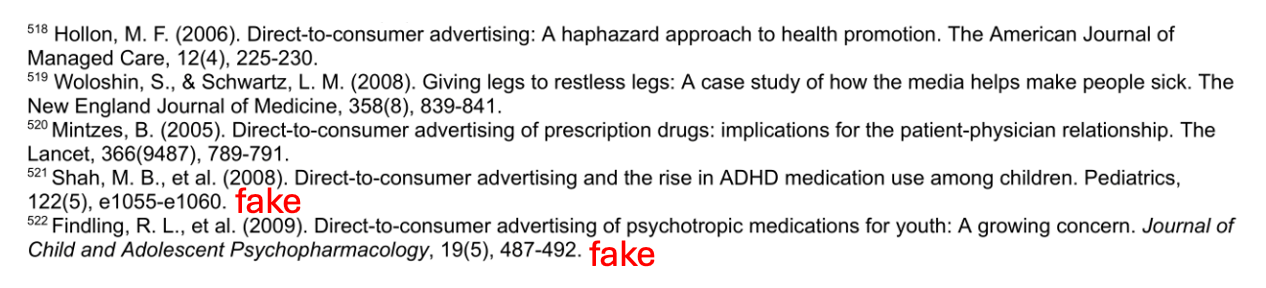

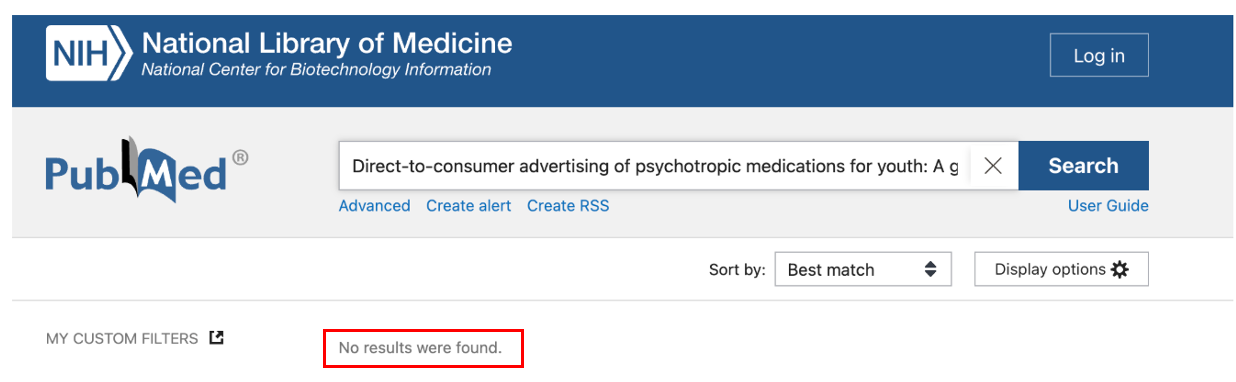

For example, the MAHA report cites a 2009 article by "Findling, R.L." entitled "Direct-to-consumer advertising of psychotropic medications for youth: A growing concern." Dr. Robert L. Findling is a real psychiatric researcher at Virginia Commonwealth University who has published on ADHD.

But he never wrote this article, and no record of an article by this title exists.

Similarly, there is no real paper matching the citation "Direct-to-consumer advertising and the rise in ADHD medication use among children" allegedly published in the journal Pediatrics.

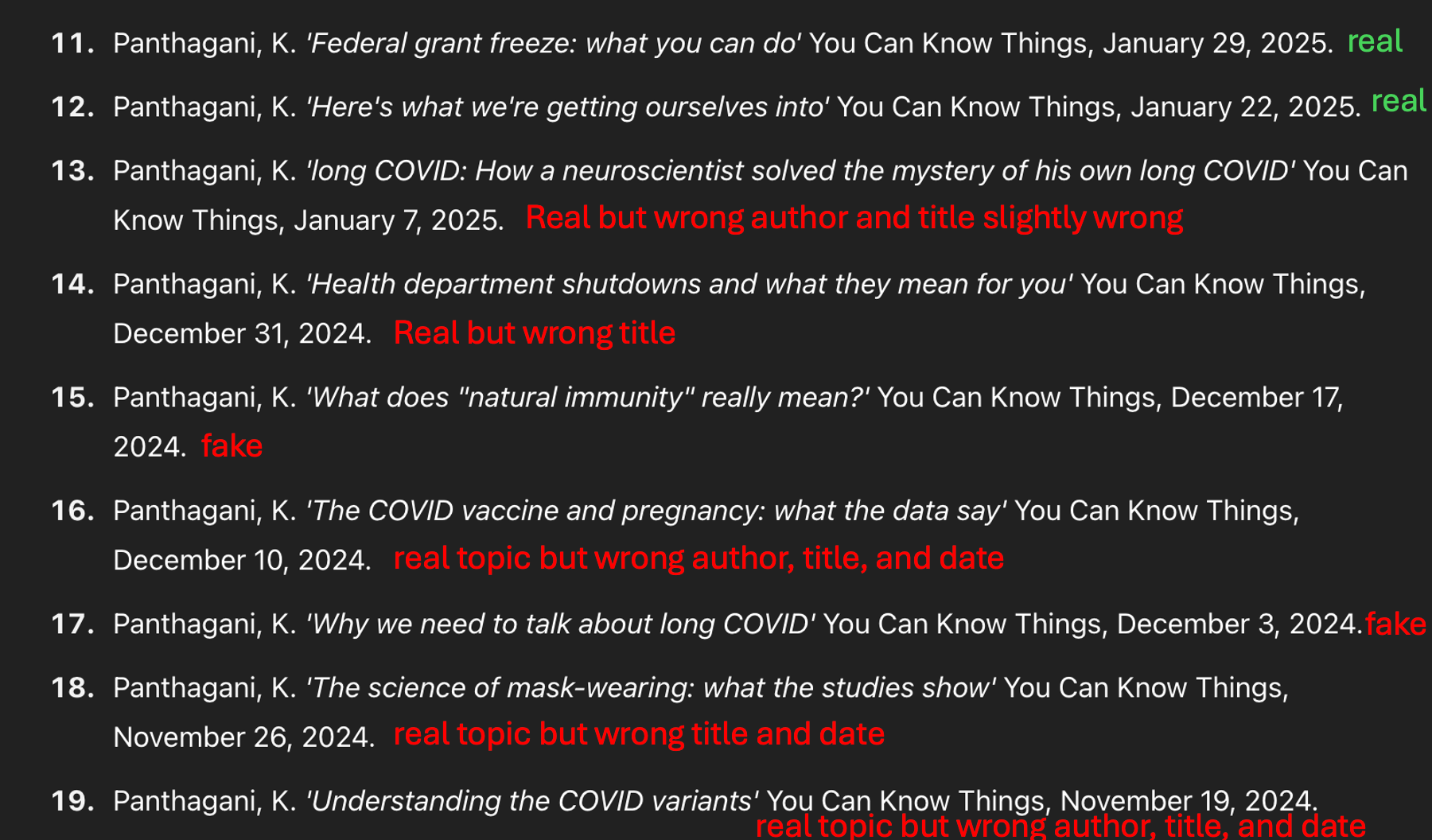

NOTUS highlighted these and several other fake citations, along with other errors, including incorrect authors and misrepresented conclusions of real studies that were cited.

AI may be to blame

The most nefarious explanation for these citations is that someone fabricated them on purpose, but I think that is unlikely. Far more likely, AI tools like ChatGPT were used to write the report. Yesterday afternoon, the White House called the errors "formatting issues" and did not comment on whether AI was used to write the report. But there is only one type of formatting tool that spontaneously generates fake citations: AI.

AI tools are notorious for "hallucinating" citations – when you ask them a research question, they will often make up content and include fabricated citations (sometimes with real authors and journals in relevant fields) as the source.

I've run into this myself when using ChatGPT. I've asked it to summarize the scientific research on a specific question, and while it sometimes cites studies correctly, if there are no data to answer the question, it will sometimes make some up. This is not helpful.

Even simple citation tasks can be a struggle for AI. I recently asked ChatGPT to make a bibliography for this blog, directing it to the page on my website that has the full list of articles. It got the first few citations right and then stopped. When I told it there were more articles and asked it to include them, it started making up plausible-sounding articles that I did not write.

The problem with chatbot science

AI is an amazing tool that can speed up some of the processes in science. But it cannot perform research, nor can it reliably summarize the research that already exists. It cannot even reliably list the titles of studies, let alone assess the quality of the data they contain. AI wants to please you, and will sometimes give you fake information if you ask it a question it can't answer.

Some of the government cuts are being made in the belief that AI can fill the role of human employees. I hope this is the first glaring sign that this will not work in science and health. AI has amazing potential, but it will always need real people who know what they're doing to discern when AI is feeding us BS, as it often does. Otherwise, we're placing our health in the hands of a hallucinating algorithm.

Kristen Panthagani, MD, PhD, is completing a combined emergency medicine residency and research fellowship focusing on health literacy and communication. In her free time, she is the creator of the medical blog You Can Know Things and author of Your Local Epidemiologist’s section on Health (Mis)communication. You can subscribe to her website below or find her on Substack, Instagram, or Bluesky. Views expressed belong to KP, not her employer.